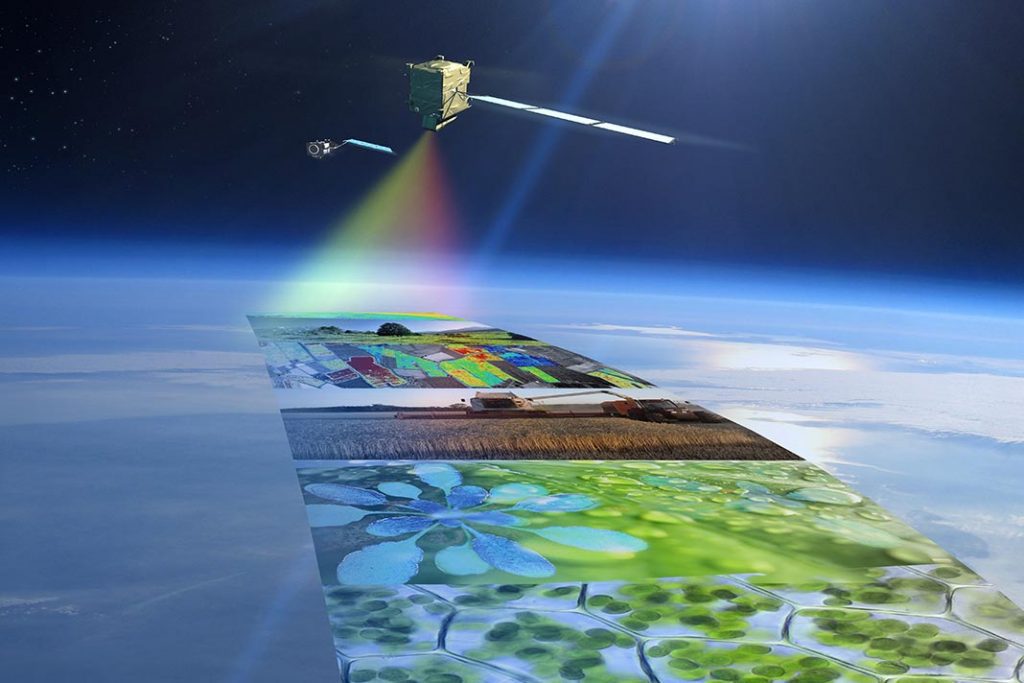

Generative artificial intelligence (AI) models are transforming various domains, from natural language processing (NLP) to image generation. As these powerful models continue to advance, addressing their environmental impact is crucial for a sustainable future. This article delves into the environmental challenges posed by generative AI models and provides actionable steps to make them more eco-friendly.

Related articles:

- Environmental Big Data for Climate Change: Benefits, Challenges, and Applications

- Tackling Climate Change with Cloud-Based Data Analytics

The Environmental Impact of Generative AI Models: Carbon Footprint and Energy Consumption

Carbon Footprint of Generative AI Models

The carbon footprint of generative AI models comes from three main sources: the energy consumed in model training, the energy consumed in inference processing, and the manufacturing of computing hardware.

Model Training

Training large language models and other deep learning models demands substantial computational resources and energy. For instance, training a model such as OpenAI’s GPT-4 or Google’s PaLM reportedly emits approximately 300 tons of CO2, comparable to the emissions of multiple trans-Atlantic flights.
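
A rough way to reason about figures like this is to multiply the energy drawn by the training hardware by the carbon intensity of the electricity that powers it. The sketch below only illustrates that arithmetic. Every input (GPU count, power draw, training time, data center PUE, grid carbon intensity) is a hypothetical placeholder, not a measurement of any real model.

```python
def training_emissions_tonnes(
    num_gpus: int,
    gpu_power_kw: float,
    hours: float,
    pue: float,
    grid_kg_co2_per_kwh: float,
) -> float:
    """Estimate training emissions in metric tons of CO2.

    energy (kWh)   = GPUs x power per GPU (kW) x hours x PUE
    emissions (kg) = energy (kWh) x grid carbon intensity (kg CO2 / kWh)
    """
    energy_kwh = num_gpus * gpu_power_kw * hours * pue
    emissions_kg = energy_kwh * grid_kg_co2_per_kwh
    return emissions_kg / 1000.0


# Hypothetical inputs chosen only to illustrate the arithmetic.
estimate = training_emissions_tonnes(
    num_gpus=1000,
    gpu_power_kw=0.4,          # assumed average draw per GPU
    hours=24 * 30,             # assumed 30 days of training
    pue=1.2,                   # assumed data center overhead factor
    grid_kg_co2_per_kwh=0.4,   # assumed grid carbon intensity
)
print(f"Estimated training emissions: {estimate:.1f} t CO2")
```

Because the result scales linearly with each input, halving the grid carbon intensity (for example, by training in a region with cleaner electricity) halves the estimate.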

Inference Processing

Although a single inference session consumes far less energy than training, the cumulative impact across many sessions of a cloud-deployed model is significant. By some estimates, inference processing accounts for 80-90% of the energy cost of neural networks.
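
The cumulative effect can be illustrated with simple arithmetic: dividing a model's one-time training energy by its per-request inference energy gives the number of requests at which inference overtakes training. The sketch below assumes both quantities are known. The values used are hypothetical placeholders, not measurements.

```python
import math


def breakeven_requests(training_wh: float, wh_per_request: float) -> int:
    """Requests needed for cumulative inference energy to reach training energy."""
    return math.ceil(training_wh / wh_per_request)


def inference_share(training_wh: float, wh_per_request: float, requests: int) -> float:
    """Fraction of total (training + inference) energy spent on inference."""
    inference_wh = wh_per_request * requests
    return inference_wh / (training_wh + inference_wh)


# Hypothetical values: 500 MWh to train, 2 Wh per request.
TRAINING_WH = 500_000_000.0
WH_PER_REQUEST = 2.0

breakeven = breakeven_requests(TRAINING_WH, WH_PER_REQUEST)
share = inference_share(TRAINING_WH, WH_PER_REQUEST, requests=2_000_000_000)

print(f"Inference matches training energy after {breakeven:,} requests")
print(f"Inference share after 2 billion requests: {share:.1%}")
```

With these made-up inputs, a widely used service passes the break-even point quickly, which is why per-request efficiency matters so much for deployed models.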

Computing Hardware and Data Centers

The manufacturing and maintenance of the powerful GPU chips and servers used to run AI models also contribute to their environmental impact. The energy consumed in producing these devices makes up a significant share of their overall energy usage.

Energy Consumption of Large Generative AI Models

Understanding the energy consumption of generative AI models throughout their life cycle is vital for evaluating their environmental sustainability.

Energy-Intensive Training

According to one research article, the latest generation of generative AI models requires ten to a hundred times more computing power to train than the previous generation, depending on the model. As a result, overall demand for computing resources is doubling approximately every six months.
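
To see what a six-month doubling period implies, the growth can be written as an exponential. This is only an illustration of the compounding, taking the stated doubling period at face value. The symbols $C_0$ (compute demand today) and $t$ (elapsed time in months) are introduced here and do not come from the cited research.

$$
C(t) = C_0 \cdot 2^{t/6}
$$

Under that assumption, demand grows 4-fold after one year ($t = 12$), 16-fold after two years ($t = 24$), and roughly 1,000-fold after five years ($2^{10} = 1024$).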

Inference Processing Energy Consumption

Ongoing usage in cloud-deployed models accumulates a significant energy footprint. Implementing efficient deployment strategies can help mitigate this environmental impact.

Fine-Tuning and Prompt-Based Training

Refining existing models through fine-tuning or prompt-based training consumes less energy and computing power than training from scratch, while still producing content tailored to specific organizational needs.

Energy Cost of Manufacturing AI Hardware

Manufacturing AI hardware is energy-intensive. By some estimates, approximately 70% of a computer’s energy usage is incurred during its manufacturing, and the energy required to manufacture powerful AI chips and servers is likely even higher.

Making Generative AI Greener: Steps Towards Environmental Sustainability

To promote environmental sustainability in generative AI, consider taking the following steps:

1. Leverage Existing Large Generative Models

Utilize large language and image models provided by established vendors or cloud providers instead of creating new models from scratch.

2. Fine-Tune Existing Models

Refine existing models through fine-tuning and prompt-based training, reducing energy consumption while aligning content with specific needs.

3. Adopt Energy-Conserving Computational Methods

Explore energy-conserving computational methods such as TinyML, which runs machine learning models on low-power edge devices and can deliver significant energy savings compared with traditional CPUs and GPUs.
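
One technique commonly used to fit models onto low-power devices is weight quantization, which stores weights as 8-bit integers instead of 32-bit floats. The sketch below is a minimal pure-Python illustration of symmetric int8 quantization, not TinyML tooling itself. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


weights = [0.52, -1.27, 0.003, 0.9, -0.41]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# int8 storage needs 1 byte per weight versus 4 bytes for float32.
print(f"float32: {4 * len(weights)} bytes, int8: {len(q)} bytes")
print(f"max round-trip error: {max_error:.4f}")
```

The trade-off is a small approximation error (at most half the scale factor per weight) in exchange for a 4x reduction in weight storage, which typically also reduces memory traffic and energy per inference.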

4. Strategically Use Large Models

Evaluate the value added by larger, more power-hungry models, ensuring that the benefits justify the additional environmental impact. Thoroughly research and analyze alternative solutions before resorting to generative AI.
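
One way to make this evaluation concrete is to pick the least energy-hungry model that still meets the quality bar for the task. The sketch below assumes you have measured accuracy and per-request energy for each candidate on your own workload. The model names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float        # task accuracy on your own evaluation set (0 to 1)
    wh_per_request: float  # measured energy per request, in watt-hours


def pick_greenest(candidates: list[Candidate], min_accuracy: float) -> Optional[Candidate]:
    """Return the lowest-energy candidate that meets the accuracy target, if any."""
    good_enough = [c for c in candidates if c.accuracy >= min_accuracy]
    return min(good_enough, key=lambda c: c.wh_per_request, default=None)


# Hypothetical candidates and measurements.
models = [
    Candidate("small", accuracy=0.86, wh_per_request=0.3),
    Candidate("medium", accuracy=0.91, wh_per_request=1.1),
    Candidate("large", accuracy=0.93, wh_per_request=4.0),
]

choice = pick_greenest(models, min_accuracy=0.90)
print(choice.name if choice else "no candidate meets the target")
```

In this made-up case the largest model adds two points of accuracy for nearly four times the energy per request, so it is selected only if the target is raised above what the medium model achieves.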

5. Prioritize Impactful Applications

Identify relevant and impactful applications, prioritizing those with significant environmental and societal benefits. Balance the usefulness of generative AI with its potential environmental impact.

Conclusion

As generative AI continues to revolutionize various domains, addressing its environmental impact is essential for ensuring a sustainable future. By leveraging existing models, refining them through fine-tuning, adopting energy-conserving computational methods, using large models strategically, and prioritizing impactful applications, we can harness the potential of generative AI while mitigating its ecological footprint. Embracing these responsible actions will allow us to enjoy the benefits of this powerful technology while safeguarding our planet.

Next Steps

Round Table Environmental Informatics (RTEI) is a consulting firm that helps clients leverage digital technologies for environmental analytics. We offer free consultations to discuss how RTEI can help you.